
Hello! I am Jay Patravali, an MS Robotics student at Oregon State University.

I hold a Bachelor's degree in Electronics and Communication Engineering from Vellore Institute of Technology.

As a Graduate Research Assistant, I am fortunate to be advised by Prof. Fuxin Li. I work with him on the DARPA Machine Common Sense Project, investigating methods for fully unsupervised object recognition in natural environments. More specifically, I work on the following topics: object discovery, video object segmentation, and detection and tracking.

Google Scholar / Email / GitHub / Personal


We present MetaUVFS, the first Unsupervised Meta-learning algorithm for Video Few-Shot action recognition. MetaUVFS leverages over 550K unlabeled videos to train a two-stream 2D and 3D CNN architecture via contrastive learning, capturing appearance-specific spatial features and action-specific spatio-temporal features, respectively. MetaUVFS includes a novel Action-Appearance Aligned Meta-adaptation (A3M) module that learns to focus on action-oriented video features in relation to appearance features via explicit few-shot episodic meta-learning over unsupervised hard-mined episodes. Our action-appearance alignment and explicit few-shot learner condition the unsupervised training to mimic the downstream few-shot task, enabling MetaUVFS to significantly outperform all unsupervised methods on few-shot benchmarks. Moreover, unlike previous few-shot action recognition methods, which are supervised, MetaUVFS needs neither base-class labels nor a supervised pretrained backbone. Thus, MetaUVFS needs to be trained only once to perform competitively with, and sometimes even outperform, state-of-the-art supervised methods on the popular HMDB51, UCF101, and Kinetics100 few-shot benchmarks.
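For readers less familiar with contrastive learning, here is a minimal sketch of a generic InfoNCE-style loss, assuming two batches of clip embeddings where row *i* of each batch comes from the same video. It is a textbook formulation for illustration only, not the MetaUVFS or A3M objective; the function name `info_nce` and the temperature `tau` are hypothetical.

```python
import numpy as np

def info_nce(z1: np.ndarray, z2: np.ndarray, tau: float = 0.1) -> float:
    """Generic InfoNCE loss (illustrative, not the MetaUVFS objective).

    Row i of z1 and row i of z2 form a positive pair (e.g. two augmented
    clips of the same video); every other row in z2 acts as a negative.
    """
    # L2-normalize so that dot products are cosine similarities
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / tau                     # (N, N) similarity matrix
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Positives sit on the diagonal; minimize their negative log-probability
    return float(-np.mean(np.diag(log_probs)))
```

Lowering `tau` sharpens the softmax, so the loss penalizes hard negatives (similar clips from different videos) more strongly.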


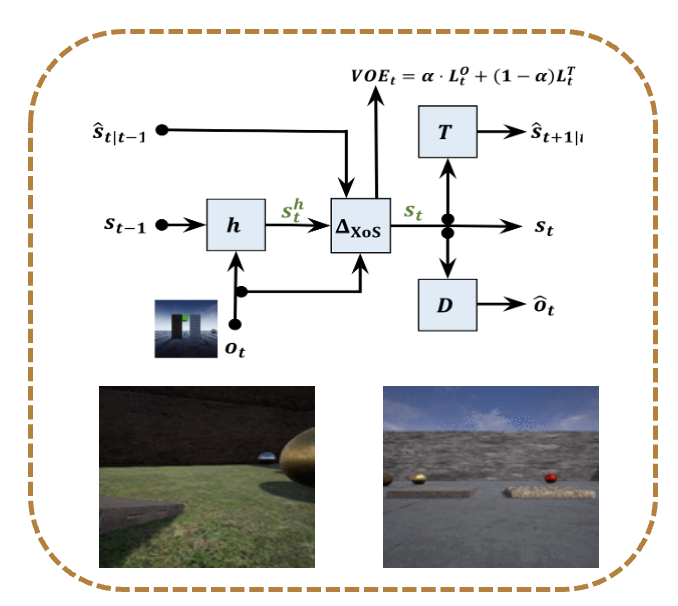

The goal of the DARPA Machine Common Sense project is to embed visual common sense in machines. We develop agents that are evaluated on multiple tasks in simulated environments, such as object-finding exploration and modelling physics via multi-object interactions, collisions, and occlusions in the presence of gravity. I contribute to various elements of the perception stack, such as object detection, filtering, tracking, and segmentation. I also develop novel algorithms for unsupervised video object segmentation. A subset of the results, "Learning Intuitive Physics by Explaining Surprise", was published at CVPR-W 2020.


Designed the architecture for the first probabilistic GAN framework for generating diverse pose predictions for occluded or uncertain joint locations in a given image. Developed software modules for network architecture and training, data processing, and experimentation. Extended this project to synthesize and predict long motion sequences using generative modeling.


I designed a vision-based localization system for the Samsung self-driving car project. We used deep CNNs to classify and detect pole-like features in street-level imagery captured by the on-board cameras. These detections were used as landmarks for particle-filter-based localization.

Alongside the research and software development needed to build this pipeline, I worked on robotics systems tasks. Our objective was to learn better representations for vehicle egomotion.
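The page does not describe the filter itself. As a generic illustration of how landmark detections can reweight particles, here is a minimal 2D sketch that assumes range-only measurements to known pole positions with independent Gaussian noise. The map format, the noise value `sigma`, and the function names `update_weights` and `systematic_resample` are hypothetical, not the project's implementation.

```python
import numpy as np

def update_weights(particles, weights, landmarks, measured_ranges, sigma=0.5):
    """Measurement update (illustrative): reweight particles (N, 2) by how well
    their predicted ranges to known landmarks (M, 2) match measured ranges (M,)."""
    # Predicted distance from every particle to every landmark: shape (N, M)
    diffs = particles[:, None, :] - landmarks[None, :, :]
    predicted = np.linalg.norm(diffs, axis=2)
    # Gaussian log-likelihood, assuming independent range measurements
    log_lik = -0.5 * np.sum(((predicted - measured_ranges) / sigma) ** 2, axis=1)
    # Subtract the max before exponentiating to avoid underflow of all weights
    new_w = weights * np.exp(log_lik - log_lik.max())
    return new_w / new_w.sum()

def systematic_resample(particles, weights, rng):
    """Draw N particles with one random offset and evenly spaced positions."""
    n = len(weights)
    positions = (rng.random() + np.arange(n)) / n
    idx = np.searchsorted(np.cumsum(weights), positions)
    # Guard against the cumulative sum falling slightly below 1.0
    idx = np.minimum(idx, n - 1)
    return particles[idx]
```

Systematic resampling is used here because it needs only one random draw per step and keeps the resampled set close to the weight distribution.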


In this work, I developed 2D and 3D pipelines for fully automated cardiac MR image segmentation using deep Convolutional Neural Networks (CNNs). Our models are trained end-to-end from scratch on the ACD Challenge 2017 dataset, which comprises 100 studies, each containing cardiac MR images at the end-diastolic and end-systolic phases. We show that both segmentation models achieve near state-of-the-art scores on distance metrics and convincing accuracy on clinical parameters.
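The page does not name the distance metrics used. Assuming they include the Hausdorff distance commonly reported for cardiac segmentation, here is a minimal NumPy sketch of it between a predicted and a ground-truth mask. The function name and the `spacing` parameter (pixel size, e.g. in mm) are hypothetical. This version uses all foreground pixels rather than extracted contours, which is simple but O(N*M) in memory.

```python
import numpy as np

def hausdorff_distance(mask_a, mask_b, spacing=(1.0, 1.0)):
    """Symmetric Hausdorff distance between two non-empty binary 2D masks,
    computed over all foreground pixels and scaled by pixel spacing."""
    # Foreground pixel coordinates in physical units: shapes (N, 2) and (M, 2)
    pts_a = np.argwhere(mask_a) * np.asarray(spacing)
    pts_b = np.argwhere(mask_b) * np.asarray(spacing)
    # Pairwise Euclidean distances: shape (N, M)
    d = np.linalg.norm(pts_a[:, None, :] - pts_b[None, :, :], axis=2)
    # Worst-case nearest-neighbour distance in each direction
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()))
```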


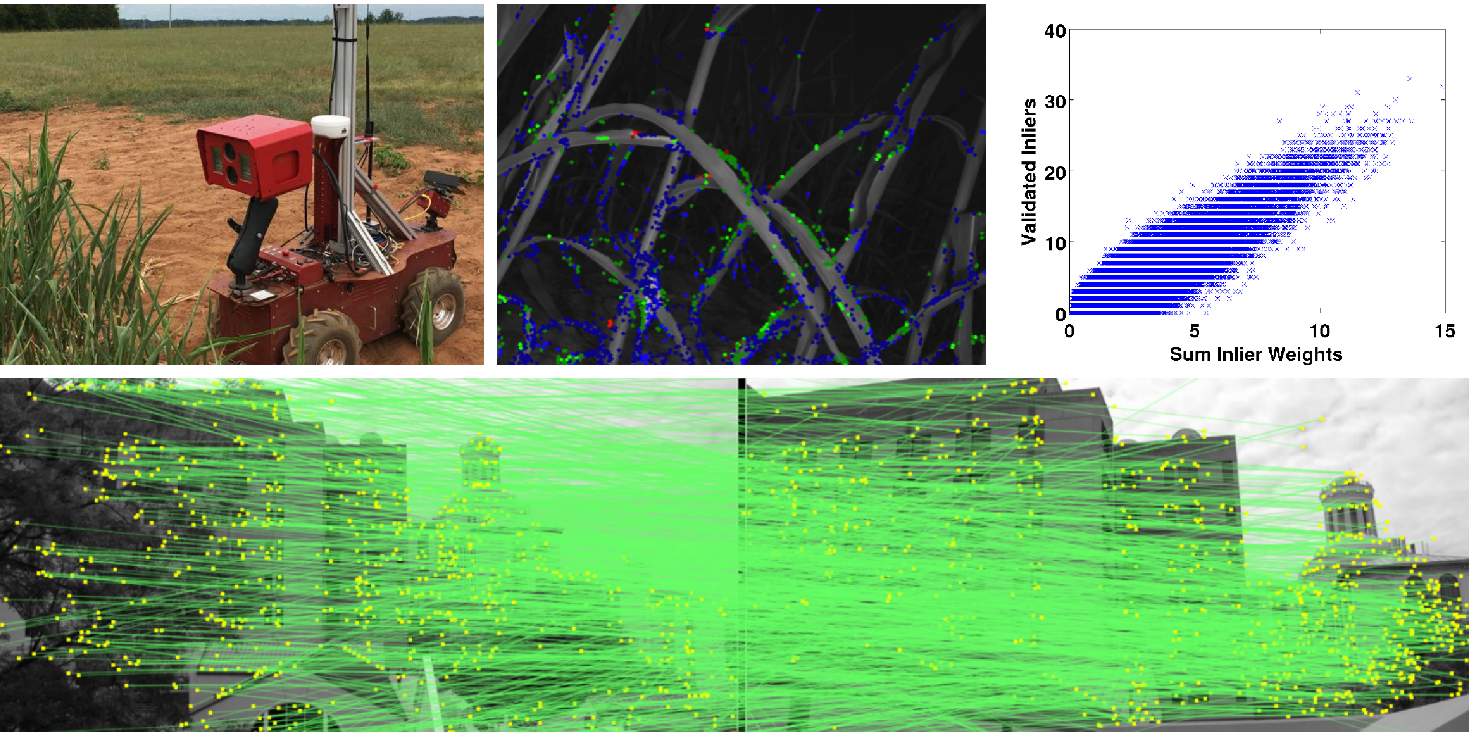

Many robotic applications that involve relocalization or 3D scene reconstruction need to estimate the geometry between camera images captured from widely different viewpoints. Computing epipolar geometry between wide-baseline image pairs is difficult because the feature correspondence stage often produces many more outliers than inliers. We present a new method, UNIQSAC, that computes weights for features to guide random hypothesis generation toward high-quality features, helping find good solutions. We also present a new method for evaluating geometry solutions that is more likely to identify correct ones. We demonstrate in a variety of outdoor environments, using both monocular and stereo image pairs, that our method produces better estimates than existing robust estimation approaches.
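The page does not spell out how UNIQSAC computes its weights. As a generic illustration of guiding random sampling toward higher-quality correspondences, the sketch below draws each minimal sample with probability proportional to a per-point quality weight and keeps the hypothesis with the most inliers. To stay short and self-contained, the toy model is a 2D line rather than epipolar geometry; the weights, the threshold, and the name `weighted_ransac` are assumptions, not UNIQSAC's weighting or its solution-evaluation method.

```python
import numpy as np

def weighted_ransac(points, weights, n_iters=200, thresh=0.1, rng=None):
    """Fit y = a*x + b to points (N, 2) with RANSAC, drawing each 2-point
    minimal sample with probability proportional to a quality weight (N,)."""
    if rng is None:
        rng = np.random.default_rng()
    p = weights / weights.sum()
    best_model, best_inliers = None, np.zeros(len(points), dtype=bool)
    for _ in range(n_iters):
        # Weighted minimal sample: high-weight points are drawn more often
        i, j = rng.choice(len(points), size=2, replace=False, p=p)
        (x1, y1), (x2, y2) = points[i], points[j]
        if np.isclose(x1, x2):
            continue  # degenerate sample: a vertical line is not representable
        a = (y2 - y1) / (x2 - x1)
        b = y1 - a * x1
        # Score the hypothesis by counting points within the residual threshold
        residuals = np.abs(points[:, 1] - (a * points[:, 0] + b))
        inliers = residuals < thresh
        if inliers.sum() > best_inliers.sum():
            best_model, best_inliers = (a, b), inliers
    return best_model, best_inliers
```

Setting all weights equal recovers plain uniform-sampling RANSAC; the weighting only changes which minimal samples are drawn more often, not how hypotheses are scored.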


We consider the problem of robot exploration in unknown environments. We test current exploration strategies in different map geometries and identify the failure cases of these algorithms under different exploration and sensor parameters. Based on our results, we characterize the robot's behaviour in each case. In addition, we propose a novel exploration algorithm that achieves improved exploration, with significantly shorter path length and lower energy consumption.
