Tool Design

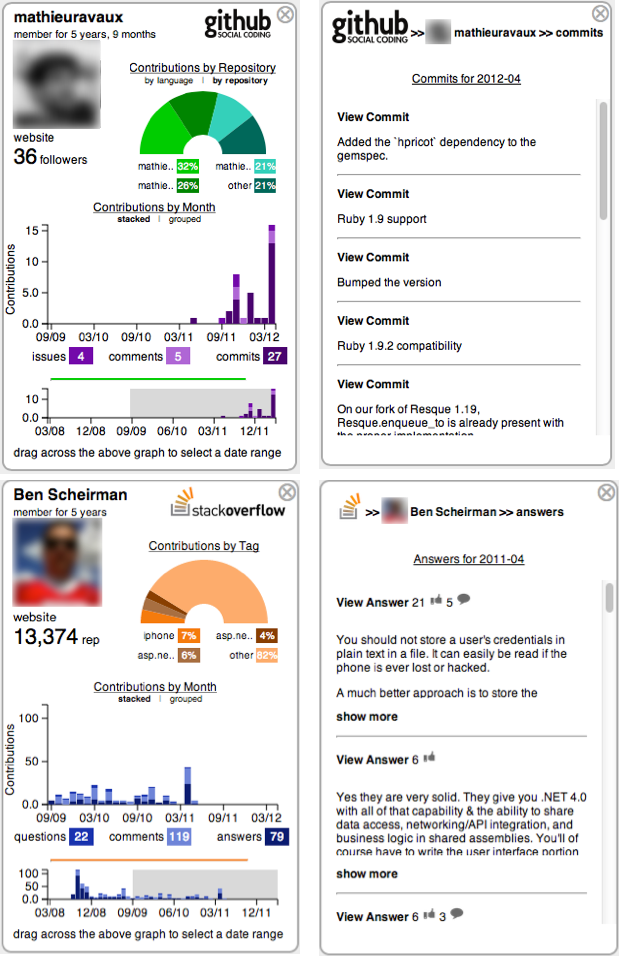

The key goals of Visual Resume are to present developers' contribution histories in a way that: (1) summarizes contributions so that large amounts of data can be easily understood, (2) presents information so that it is easy to view and compare across different candidates, contributions, or sites, and (3) allows investigation of specific contributions, so that they are easy not only to access but also to assess.

– A video of a walkthrough of the Visual Resume tool is here.

– A small-scale demo (2 candidates) of the Visual Resume tool is here.

– The source code of the tool is here, but the tool is not open source yet, as we are contemplating its extension and commercialization.

User Evaluation:


We conducted a scenario-based task analysis study to understand how Visual Resume can help in evaluating multiple candidates. The goal of this study was to evaluate how participants used the information provided by the tool, and what other kinds of information they sought when given a scenario in which they had to choose from a set of potential job candidates. We asked participants to think aloud during the study, verbalizing their actions and the intentions behind them.

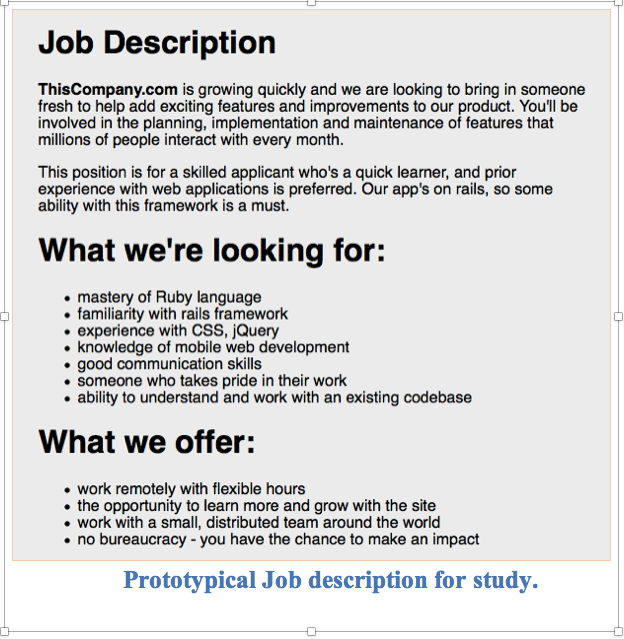

Prototypical job description: We created the job description to represent a typical job posting for web development positions. To do so, we reviewed postings on popular job posting sites including LinkedIn, Careers 2.0, and CareerBuilder [5]. Our posting included aspects that typically appeared across many postings, including: position description, job responsibilities, required qualifications, and benefits.

Task description: Participants were told that they were the technical lead and had to assess a set of candidates in response to their job advertisement (see above). Participants performed two types of tasks: (1) a filtering task where they selected two candidates from a pool of five, and (2) a focused comparison between the two selected candidates.

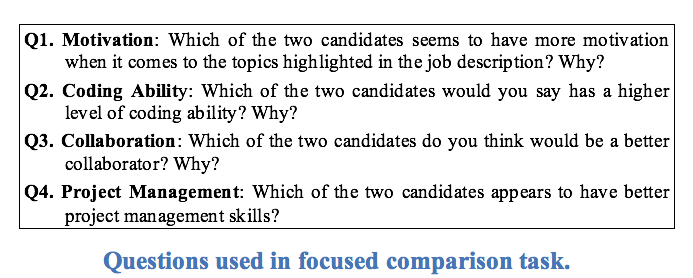

The filtering task was designed to solicit higher-level comparisons across a (large) set of candidates. This type of filtering is common in the early stage of job candidate evaluation. Next, participants were asked to perform focused comparisons of their two “best” candidates across the four dimensions shown below (i.e., motivation, coding ability, collaboration potential, and project management abilities). These four dimensions were selected because they were found to be the most relevant in our formative study. Participants were then asked to select one of the two candidates along each of these dimensions.

Job candidate selection: We selected five potential job candidates for the study. These candidates were selected to represent typical GitHub and Stack Overflow users with some expertise in the topic areas indicated in the job description (Ruby and JavaScript programming). To identify these candidates, we first extracted a dataset of GitHub participants with at least one commit to the Ruby on Rails project on GitHub. Next, we queried Stack Overflow for these users to identify the subset with profiles and activity on the site. From this sample of 2,300 users common to both communities, we then identified candidates who had reasonable activity on both sites (240 users). From this set, we randomly selected the five candidates for the study.
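The selection funnel described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the scripts used in the study: the `User` record, the `select_candidates` function, and the `min_commits` and `min_posts` thresholds are hypothetical, since the text does not define what counted as "reasonable activity" on either site.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    """Hypothetical record joining one person's activity on both sites."""
    login: str
    rails_commits: int  # commits to the Ruby on Rails project on GitHub
    so_posts: int       # Stack Overflow questions + answers (0 if no profile)


def select_candidates(users, min_commits=5, min_posts=5, k=5, seed=None):
    """Filter users in stages, then draw k of them at random.

    min_commits and min_posts are assumed stand-ins for the
    "reasonable activity" criterion, which the study does not quantify.
    """
    # Stage 1: at least one commit to Ruby on Rails.
    committers = [u for u in users if u.rails_commits >= 1]
    # Stage 2: also present and active on Stack Overflow.
    common = [u for u in committers if u.so_posts >= 1]
    # Stage 3: "reasonable" activity on both sites (assumed thresholds).
    active = [
        u for u in common
        if u.rails_commits >= min_commits and u.so_posts >= min_posts
    ]
    # Stage 4: uniform random draw without replacement.
    rng = random.Random(seed)
    return rng.sample(active, min(k, len(active)))
```

Passing a fixed `seed` makes the random draw repeatable, which is useful if the same candidate set must be reconstructed later.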

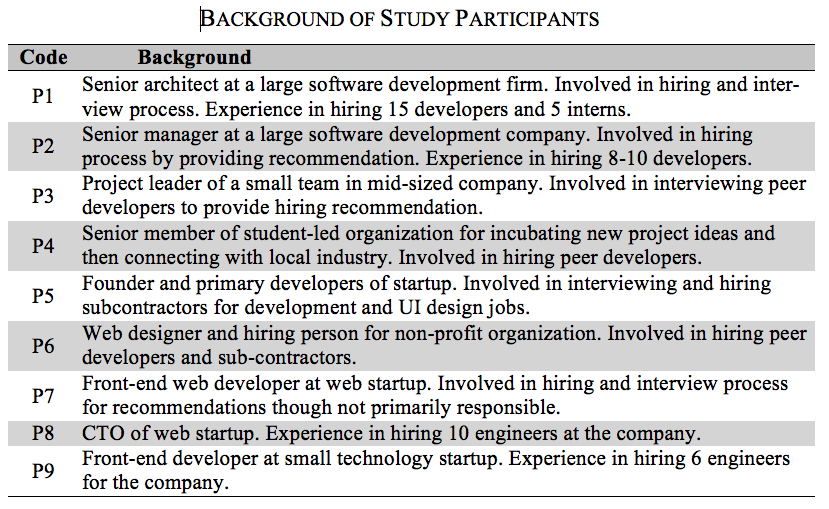

Study Participants: We recruited nine participants for our study. These participants were selected to represent individuals who had experience working in and recruiting for global organizations, or hiring from a pool of global candidates. We also recruited participants from both corporate (P1-P3) and small software companies (P4-P9). This was done because the software engineering practices used in established corporations vary from those used in lean startup operations, where agile methods are more prevalent. Differences in typical development practice may in turn suggest different cues for evaluating job candidates. Further, we included participants who hire for their teams as well as those who interview their peer developers.

Data Analysis: We coded transcripts from observations, notes, and participants’ think-aloud feedback to identify key strategies or patterns in how candidates were evaluated. We first identified instances in the transcripts of the types of cues that participants used to judge candidates’ expertise. We then compared each instance with previously identified hiring cues (discussed in Section II) and grouped those that were conceptually similar. Finally, we identified which tool features were useful to the participants in evaluating candidates.